Using XGBoost on the GPU


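Run in a Kaggle Notebook: with a training set of about 590,000 rows, training takes less than 40 s.

The data is the IEEE-CIS competition dataset.

The code is as follows: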

# # About this kernel

#

# Before I get started, I just wanted to say: huge props to Inversion! The official starter kernel is **AWESOME**; it's so simple, clean, straightforward, and pragmatic. It certainly saved me a lot of time wrangling with data, so that I can directly start tuning my models (real data scientists will call me lazy, but hey I'm an engineer I just want my stuff to work).

#

# I noticed two tiny problems with it:

# * It takes a lot of RAM to run, which means that if you are using a GPU, it might crash as you try to fill missing values.

# * It takes a while to run (roughly 3500 seconds, which is nearly an hour; again, I'm a lazy guy and I don't like waiting).

#

# With this kernel, I bring some small changes:

# * Decrease RAM usage, so that it won't crash when you change it to GPU. I simply changed when we are deleting unused variables.

# * Decrease **running time from ~3500s to ~40s** (yes, that's almost 90x faster), at the cost of a slight decrease in score. This is done by adding a single argument.

#

# Again, my changes are super minimal (cause Inversion's kernel was already so awesome), but I hope it will save you some time and trouble (so that you can start working on cool stuff).

#

#

# ### Changelog

#

# **V4**

# * Change some wording

# * Prints XGBoost version

# * Add random state to XGB for reproducibility

# %% [code]

import os

import numpy as np

import pandas as pd

# %% [markdown]

# # Efficient Preprocessing

#

# This preprocessing method is more careful with RAM usage, which avoids crashing the kernel when you switch from CPU to GPU. Otherwise, it is exactly the same procedure as the official starter.

# %% [code]

!pip install datatable

# %% [code]

%%time

# (590540, 433)

# (506691, 432)

# CPU times: user 40.4 s, sys: 11.5 s, total: 51.9 s

# Wall time: 52 s

# import datatable as dt

# train_transaction =dt.fread("../input/train_transaction.csv").to_pandas()

# test_transaction = dt.fread('../input/test_transaction.csv', index_col='TransactionID').to_pandas()

# train_identity = dt.fread('../input/train_identity.csv', index_col='TransactionID').to_pandas()

# test_identity = dt.fread('../input/test_identity.csv', index_col='TransactionID').to_pandas()

import pandas as pd

train_transaction = pd.read_csv('../input/ieee-fraud-detection/train_transaction.csv', index_col='TransactionID')

test_transaction = pd.read_csv('../input/ieee-fraud-detection/test_transaction.csv', index_col='TransactionID')

train_identity = pd.read_csv('../input/ieee-fraud-detection/train_identity.csv', index_col='TransactionID')

test_identity = pd.read_csv('../input/ieee-fraud-detection/test_identity.csv', index_col='TransactionID')

sample_submission = pd.read_csv('../input/ieee-fraud-detection/sample_submission.csv', index_col='TransactionID')

train = train_transaction.merge(train_identity, how='left', left_index=True, right_index=True)

test = test_transaction.merge(test_identity, how='left', left_index=True, right_index=True)

# TODO: find a way to speed up this step with datatable.

# %% [code]

import numpy as np

train['Transaction_hour'] = np.floor(train['TransactionDT'] / 3600) % 24

test['Transaction_hour'] = np.floor(test['TransactionDT'] / 3600) % 24

# train_one_column=np.floor(train['TransactionDT']*1.0 % 86400) / 3600  # pandas DataFrame -> Series

# test_one_column =np.floor(test['TransactionDT']*1.0 % 86400) / 3600  # pandas DataFrame -> Series

del train["TransactionDT"]

del test["TransactionDT"]

# train["TransactionDT"]=train_one_column  # append as the last column

# test["TransactionDT"]=test_one_column  # append as the last column

train.head(10)

# print(train_one_column)

# df=pd.DataFrame(one_column)

# df.columns.name = 'TransactionDF_hour'

# # Series -> pandas DataFrame

# one_column_dict={col:df[col].tolist() for col in df.columns}  # pandas DataFrame -> dict

# one_column=dt.Frame(one_column_dict)  # dict -> datatable Frame
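
As a quick check of the `Transaction_hour` arithmetic, the sketch below applies the same expression to a handful of offsets. It assumes, as the cell above implicitly does, that `TransactionDT` is an offset in seconds from some reference time; the sample values are made up and do not come from the dataset.

```python
import numpy as np
import pandas as pd

# Hypothetical TransactionDT offsets in seconds (illustrative values only).
toy = pd.DataFrame({"TransactionDT": [0, 3599, 3600, 86399, 86400, 90000]})

# Same expression as in the notebook: whole hours elapsed, wrapped to 0-23.
toy["Transaction_hour"] = np.floor(toy["TransactionDT"] / 3600) % 24

print(toy)
```

Note that the commented-out variant, `np.floor(dt % 86400) / 3600`, is not equivalent: it divides after flooring, so it would produce a fractional hour of day rather than a whole one.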

# %% [code]

print(train.shape)

print(test.shape)

y_train = train['isFraud'].copy()

del train_transaction, train_identity, test_transaction, test_identity

# Drop target, fill in NaNs

X_train = train.drop('isFraud', axis=1)

X_test = test.copy()

del train, test

X_train = X_train.fillna(-999)

X_test = X_test.fillna(-999)
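
The RAM saving in this cell comes purely from ordering: each intermediate frame is deleted as soon as the next one has been derived from it. A minimal sketch of that pattern on toy frames could look like the following. The `mem_mb` helper, the column names, and the sizes are all invented for illustration.

```python
import gc

import numpy as np
import pandas as pd


def mem_mb(df: pd.DataFrame) -> float:
    """Hypothetical helper: in-memory size of a DataFrame in megabytes."""
    return df.memory_usage(deep=True).sum() / 1024 ** 2


rng = np.random.default_rng(0)

# Toy stand-ins for the transaction and identity tables.
transaction = pd.DataFrame(
    rng.normal(size=(10_000, 20)), columns=[f"t{i}" for i in range(20)]
)
identity = pd.DataFrame(
    rng.normal(size=(2_500, 10)),
    index=np.arange(0, 10_000, 4),  # only every 4th row has identity data
    columns=[f"id{i}" for i in range(10)],
)

merged = transaction.merge(identity, how="left", left_index=True, right_index=True)
print(f"merged: {mem_mb(merged):.2f} MB")

# The sources are no longer needed once the merge exists, so free them
# before the next step creates another full copy.
del transaction, identity
gc.collect()

filled = merged.fillna(-999)  # returns a new frame, so two copies exist briefly
del merged
gc.collect()
print(f"filled: {mem_mb(filled):.2f} MB")
```

At any point only the frames still needed are alive, which is what keeps the peak footprint down when `fillna` makes its copy.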

# %% [code]

%%time

# CPU times: user 49.9 s, sys: 380 ms, total: 50.3 s

# Wall time: 50.8 s

# Label Encoding

from sklearn import preprocessing

for f in X_train.columns:
    if X_train[f].dtype=='object' or X_test[f].dtype=='object':
        lbl = preprocessing.LabelEncoder()
        lbl.fit(list(X_train[f].values) + list(X_test[f].values))
        X_train[f] = lbl.transform(list(X_train[f].values))
        X_test[f] = lbl.transform(list(X_test[f].values))
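
The loop fits each encoder on the train and test values together. The toy sketch below, with made-up category names, illustrates why: an encoder fitted on the training column alone raises an error as soon as the test column contains a category it has never seen.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Toy columns; "tablet" appears only in the test split.
train_col = pd.Series(["mobile", "desktop", "mobile"])
test_col = pd.Series(["tablet", "desktop"])

# Fitting on train only: transforming the test column fails.
train_only = LabelEncoder().fit(train_col)
try:
    train_only.transform(test_col)
    unseen_failed = False
except ValueError as err:
    unseen_failed = True
    print("train-only encoder:", err)

# Fitting on the union, as the notebook does, covers every category.
enc = LabelEncoder()
enc.fit(list(train_col.values) + list(test_col.values))
train_codes = enc.transform(list(train_col.values))
test_codes = enc.transform(list(test_col.values))

print(list(enc.classes_), train_codes, test_codes)
```

The integer codes follow the sorted order of the categories, so they carry no meaning beyond identity; tree models can still split on them.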

# %% [code]

import gc

gc.collect()

# %% [markdown]

# # Training

#

# To activate GPU usage, simply use `tree_method='gpu_hist'` (took me an hour to figure out, I wish XGBoost documentation was clearer about that).

# %% [code]

import xgboost as xgb

clf = xgb.XGBClassifier(

    n_estimators=500,
    max_depth=9,
    learning_rate=0.05,
    subsample=0.9,
    colsample_bytree=0.9,
    missing=-999,
    random_state=2019,
    tree_method='gpu_hist'  # THE MAGICAL PARAMETER

)
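
One caveat if you run this on a newer XGBoost: from version 2.0 onwards `gpu_hist` is deprecated, and the GPU is selected with `tree_method='hist'` together with `device='cuda'`. A small sketch of choosing the right keyword arguments from the version string follows; `gpu_tree_params` is a hypothetical helper written for this post, not part of XGBoost.

```python
def gpu_tree_params(xgb_version: str) -> dict:
    """Hypothetical helper: GPU histogram settings for a given XGBoost version."""
    major = int(xgb_version.split(".")[0])
    if major >= 2:
        # XGBoost 2.0+ separates the algorithm from the device.
        return {"tree_method": "hist", "device": "cuda"}
    # Older releases encode the device in the tree method name.
    return {"tree_method": "gpu_hist"}


print(gpu_tree_params("0.90"))
print(gpu_tree_params("2.0.3"))
```

In the notebook this could be used as `xgb.XGBClassifier(..., **gpu_tree_params(xgb.__version__))` in place of the hard-coded `tree_method` argument.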

# %% [markdown]

# * `gpu_hist`: score 0.9355

# * `gpu_exact`: score 0.9331

# %% [code]

%time clf.fit(X_train, y_train)

# %% [markdown]

# Some of you must be wondering how we were able to decrease the fitting time by that much. It is not only because we are running on the GPU: we are also computing a histogram-based approximation of the exact greedy algorithm.

#

# This hurts the score slightly, but it makes training much faster.

#

# So why am I not using CPU with `tree_method='hist'`?

# If you try it out yourself, you'll realize it'll take ~ 7 min, which is still far from the GPU fitting time.

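# Similarly, `tree_method='gpu_exact'` will take ~ 4 min,

# and is expected to yield better accuracy than `gpu_hist` or `hist`, although the figures recorded above are 0.9331 for `gpu_exact` against 0.9355 for `gpu_hist`.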

#

# The [docs on parameters](https://xgboost.readthedocs.io/en/latest/parameter.html) has a section on `tree_method`, and it goes over the details of each option.
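
To make the exact-versus-histogram distinction concrete, here is a toy NumPy sketch of split finding on a single feature. It is not XGBoost's implementation: the gain is the usual second-order formula with a regularisation term `lam`, the bins are plain quantiles, and every function name is invented for illustration.

```python
import numpy as np


def split_gain(g_left, h_left, g_total, h_total, lam=1.0):
    """Gain of a split, given gradient/hessian sums on the left side."""
    g_right, h_right = g_total - g_left, h_total - h_left
    return (g_left ** 2 / (h_left + lam)
            + g_right ** 2 / (h_right + lam)
            - g_total ** 2 / (h_total + lam))


def best_split_exact(x, g, h, lam=1.0):
    """Exact greedy: try a threshold between every pair of distinct sorted values."""
    order = np.argsort(x)
    xs, gs, hs = x[order], np.cumsum(g[order]), np.cumsum(h[order])
    best_gain, best_thr, tried = -np.inf, None, 0
    for i in range(len(xs) - 1):
        if xs[i] == xs[i + 1]:
            continue  # no threshold can separate equal values
        tried += 1
        gain = split_gain(gs[i], hs[i], gs[-1], hs[-1], lam)
        if gain > best_gain:
            best_gain, best_thr = gain, (xs[i] + xs[i + 1]) / 2
    return best_gain, best_thr, tried


def best_split_hist(x, g, h, n_bins=16, lam=1.0):
    """Histogram: bucket x into quantile bins and only try the bin edges."""
    edges = np.quantile(x, np.linspace(0, 1, n_bins + 1)[1:-1])
    bins = np.searchsorted(edges, x, side="right")  # bin index per row
    gs = np.cumsum(np.bincount(bins, weights=g, minlength=n_bins))
    hs = np.cumsum(np.bincount(bins, weights=h, minlength=n_bins))
    best_gain, best_thr = -np.inf, None
    for b in range(n_bins - 1):
        gain = split_gain(gs[b], hs[b], gs[-1], hs[-1], lam)
        if gain > best_gain:
            best_gain, best_thr = gain, edges[b]
    return best_gain, best_thr, n_bins - 1


rng = np.random.default_rng(0)
x = rng.normal(size=2000)
y = (x > 0.3).astype(float)
# Squared-error loss at an initial prediction of 0: gradient = -y, hessian = 1.
g, h = -y, np.ones_like(y)

exact_gain, exact_thr, exact_tried = best_split_exact(x, g, h)
hist_gain, hist_thr, hist_tried = best_split_hist(x, g, h)
print(f"exact: gain={exact_gain:.2f} thr={exact_thr:.3f} candidates={exact_tried}")
print(f"hist:  gain={hist_gain:.2f} thr={hist_thr:.3f} candidates={hist_tried}")
```

The histogram version evaluates only a handful of candidate thresholds per feature instead of one per distinct value, so it can never find a better split than the exact scan, only an equal or slightly worse one. That is the trade-off described above: less work per tree at a small cost in split quality.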

# %% [code]

sample_submission['isFraud'] = clf.predict_proba(X_test)[:,1]

sample_submission.to_csv('simple_xgboost-gpu-exact.csv')

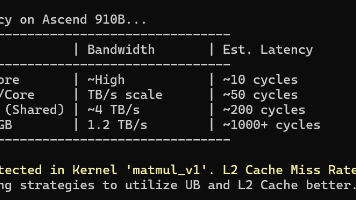

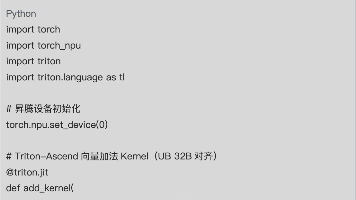
